· LoroNote · AI · 2 min read

Codex Overview

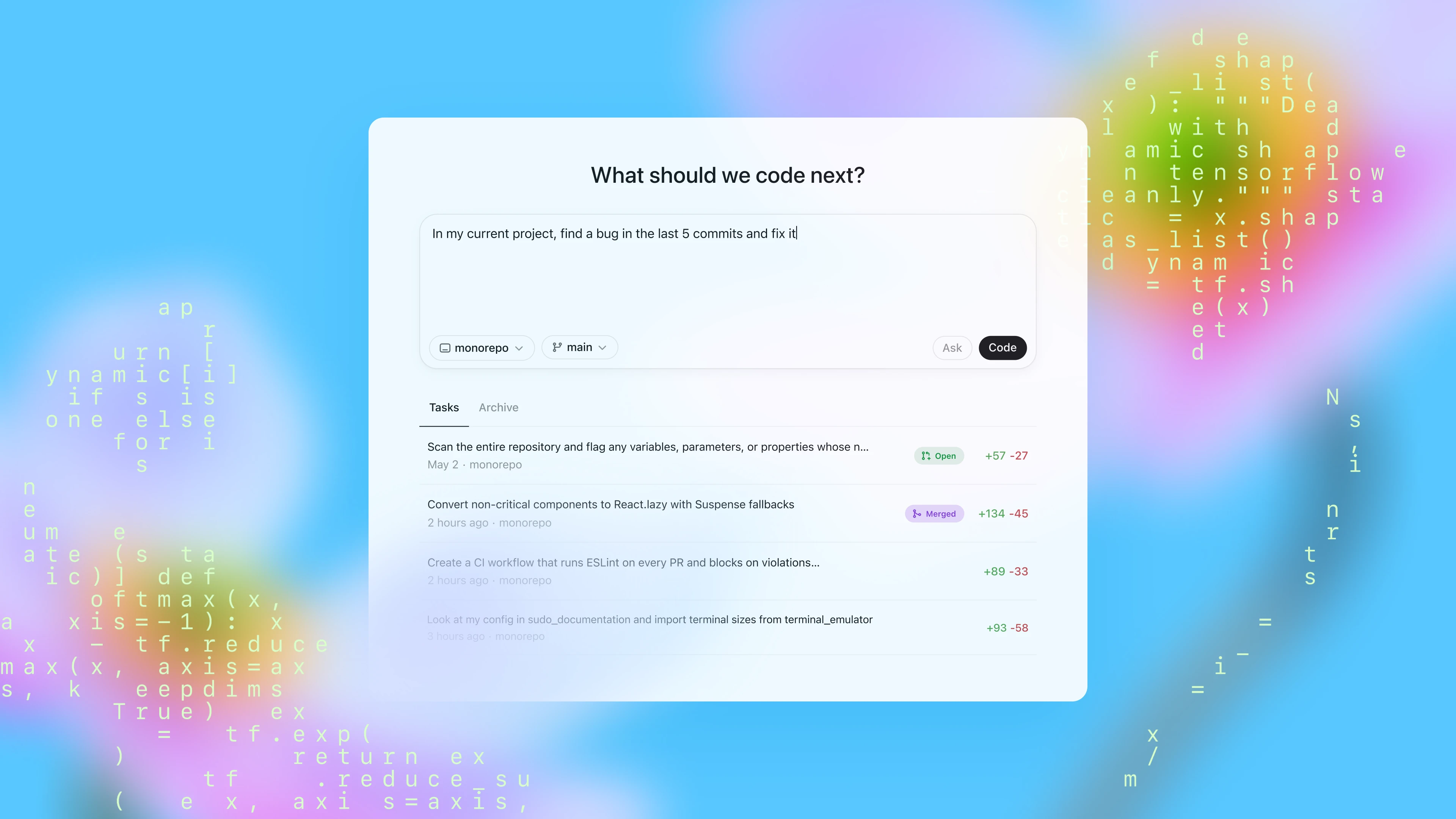

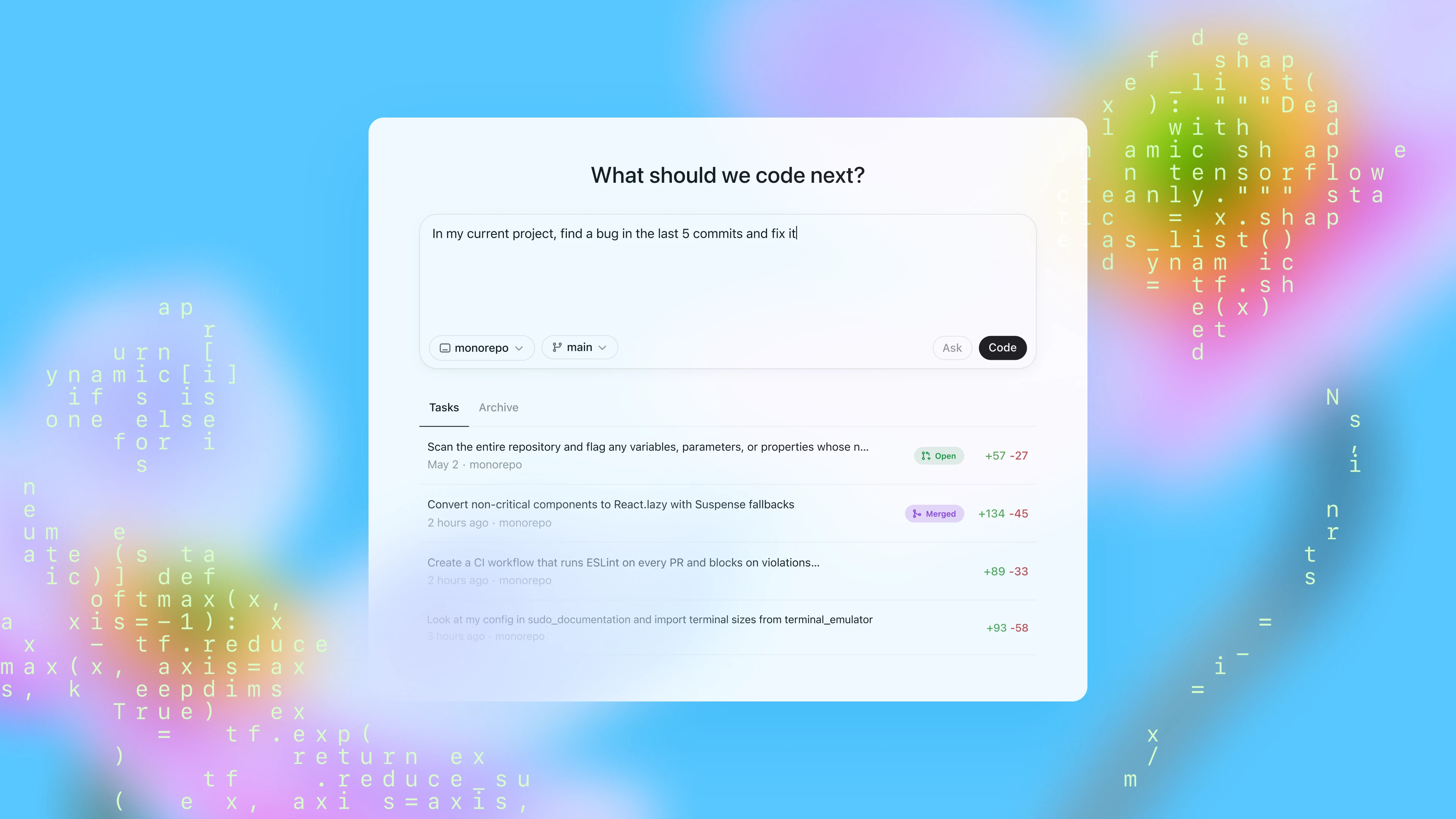

On May 16, 2025, OpenAI introduced Codex as a research preview: a cloud-based software engineering agent that can work on multiple tasks in parallel. It launched for ChatGPT Pro, Team, and Enterprise users, and as of June 3, 2025, it is also available to Plus users.

Source: OpenAI, "Introducing Codex"

Key Features

1. Cloud-Based Multitasking Agent

- Writes features, answers codebase questions, fixes bugs, and proposes PRs

- Runs entirely in an isolated cloud sandbox

- Provides verifiable evidence such as commits, terminal logs, and test outputs

2. Optimized for Software Engineering

- Powered by codex-1, a version of OpenAI o3 optimized for software engineering

- Trained with reinforcement learning on real-world coding tasks so that its code matches human style and PR preferences

- Configurable through AGENTS.md to control codebase navigation, test commands, and coding style
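
To make the AGENTS.md bullet concrete, here is a minimal sketch of what such a file might contain. Everything in it is a hypothetical example for an imaginary Node.js project: the directory names, the `npm` scripts, and the section headings are assumptions, not a format required by Codex. AGENTS.md is ordinary Markdown text placed in the repository, so the wording is up to the team.

```markdown
# AGENTS.md (hypothetical example)

## Navigating the codebase
- Application code lives in `src/`; tests live in `tests/`.
- Do not edit generated files under `dist/`.

## Running tests
- Run `npm test` before proposing changes; all tests must pass.
- Run `npm run lint` and fix any reported issues.

## Coding style
- Use TypeScript with strict mode enabled.
- Prefer small, focused functions and descriptive names.

## Pull requests
- Summarize what changed and why, and list the test commands you ran.
```

The three middle sections map directly onto the things the bullet says AGENTS.md can control: codebase navigation, test commands, and coding style.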

3. Safety and Transparency

- Executes only in isolated cloud containers

- No internet access during task execution at launch, so the agent cannot reach external APIs or websites

- Explicitly reports failed tests or uncertain results

4. Real-World Applications

- Cisco: Helps accelerate innovation

- Temporal: Speeds up development, debugging, and refactoring

- Superhuman: Improves repetitive workflows

- Kodiak: Assists in autonomous driving R&D with contextual support

5. Codex CLI

- Codex CLI is an open-source coding agent that runs in the terminal

- Uses codex-mini-latest, a lightweight model based on o4-mini

- Free API credits for Plus and Pro users who sign in to Codex CLI with their ChatGPT account:

  - Plus: $5 for 30 days

  - Pro: $50 for 30 days

6. Availability & Pricing

- Launched for Pro, Team, and Enterprise users, then expanded to Plus on June 3; Edu access is planned

- Early access: generous free usage

- Later: usage-based billing

- codex-mini-latest API pricing:

  - $1.50 per 1M input tokens

  - $6.00 per 1M output tokens

  - 75% discount for prompt caching
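
The pricing above is easier to reason about with a worked calculation. The sketch below estimates the cost of a single request from the three listed figures. The helper name `estimate_cost` and its parameters are hypothetical, and it assumes the 75% caching discount applies only to input tokens served from the prompt cache, so those tokens are billed at 25% of the input rate.

```python
# Prices quoted in this article, in US dollars per 1M tokens.
INPUT_PRICE_PER_M = 1.50
OUTPUT_PRICE_PER_M = 6.00
# Assumption: the discount applies to cached input tokens only.
CACHE_DISCOUNT = 0.75


def estimate_cost(input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0) -> float:
    """Estimate the USD cost of one request (hypothetical helper)."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")

    uncached_tokens = input_tokens - cached_input_tokens
    uncached_cost = uncached_tokens * INPUT_PRICE_PER_M / 1_000_000
    # Cached input tokens are billed at the input rate minus the discount.
    cached_cost = (cached_input_tokens * INPUT_PRICE_PER_M
                   * (1 - CACHE_DISCOUNT) / 1_000_000)
    output_cost = output_tokens * OUTPUT_PRICE_PER_M / 1_000_000
    return uncached_cost + cached_cost + output_cost


if __name__ == "__main__":
    # 200k input tokens (half of them cached) and 50k output tokens.
    cost = estimate_cost(200_000, 50_000, cached_input_tokens=100_000)
    print(f"${cost:.4f}")  # prints $0.4875
```

In this example the output tokens account for $0.30 of the $0.4875 total, which shows that at a 4:1 output-to-input price ratio, response length tends to dominate the bill.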

Example Usage

Here’s a simple example of using Codex CLI:

    # Install Codex CLI (the npm package is @openai/codex)
    npm install -g @openai/codex

    # Create and enter a project directory
    mkdir my-app
    cd my-app

    # Ask for a feature: an Express.js server with a GET / endpoint
    codex "Create an Express server with a default GET / endpoint"